Ok. I am finally moving from Docker Compose to Docker Stack. It has been a while since I updated my Docker environment, and I am very happy with the direction Docker has taken. It is the direction that I have personally been promoting. Check out my blog on Services in Multiple environments.

Multiple Environment Development.

Swarm Concepts

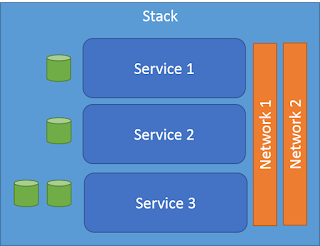

The first thing I did was read up on how the concepts changed between compose and stack. Docker introduced the new concepts of Stack, Service and Task. The easiest way to think of it is that a Stack consists of several services, networks and volumes. A Stack can represent a complex application that has multiple services.

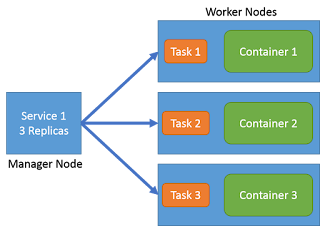

A Service can have a set of replicas, each consisting of an image running in a container and the tasks that run on that container. A Service has state. This is where compose and stack differ. Compose launches all of the containers, runs the tasks and then forgets about them. Stack keeps track of the state of the containers even after they have been launched. This means that if a container belonging to a service goes down, Swarm launches another one in its place based on policies. Basically, your application is kept up by Swarm: built-in HA, load balancing and business continuity.
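The "based on policies" part can be sketched in a compose file. This is a minimal example, assuming the version 3 file format, where the deploy section of a service carries the replica count and the restart policy. The numbers are illustrative only.

```yaml
version: "3"
services:
  etsy-web:
    image: etsy-web
    deploy:
      replicas: 2                # illustrative: keep two copies of the container running
      restart_policy:
        condition: on-failure    # replace a container only when it exits with an error
        delay: 5s                # wait before starting the replacement
        max_attempts: 3          # stop retrying after three failed attempts
```

With something like this in place, Swarm is the one watching the containers, which is exactly what compose on its own does not do.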

When you specify a service you specify:

- the port where the swarm will make the service available outside the swarm

- an overlay network for the service to connect to other services in the swarm

- CPU and memory limits and reservations

- a rolling update policy

- the number of replicas of the image to run in the swarm

Notice the word Swarm here. You must have a Docker swarm before you can use services and stacks.
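To make the list above concrete, here is a rough sketch of one service that sets all five items. It assumes a version 3 compose file and a swarm that already exists (for example one created with docker swarm init). The network name etsy-net and every number are made up for illustration.

```yaml
version: "3"
services:
  etsy-web:
    image: etsy-web
    ports:
      - "80:80"                  # port published outside the swarm
    networks:
      - etsy-net                 # hypothetical overlay network shared with other services
    deploy:
      replicas: 3                # number of replicas of the image to run
      resources:
        limits:                  # hard caps per container
          cpus: "0.50"
          memory: 256M
        reservations:            # amounts set aside by the scheduler
          cpus: "0.25"
          memory: 128M
      update_config:             # rolling update policy
        parallelism: 1           # update one container at a time
        delay: 10s               # pause between updates
networks:
  etsy-net:
    driver: overlay
```

Everything under deploy is only meaningful to a swarm, which is why the swarm has to be there first.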

Practical differences

Compose files can be used for Stack deployments, but there are a couple of things to watch out for.

- "build" is not supported in stack; you have to build the image with docker build.

- "external_links" is not supported in stack; this is covered by links and external hosts.

- "env_file" is not supported in stack; you have to specify each environment variable with "environment".

Wow! That was a problem for me because my compose file had build directives for my project and used env_file to pass in environment variables. I had to make changes to get things working the way they did before.

"build" Alternative

Simply put, stack services only take images. That means you must build your image before you deploy or update your stack. Instead of just specifying the build in the service definition, you must call docker build before calling docker stack deploy.

File: docker-compose.yaml

    etsy-web:
      build: .
      expose:
        - 80
        - 8080
        - 1337
      links:
        - etsy-mongo
        - etsy-redis
      ports:
        - "1337:1337"
        - "80:80"
      command: npm start

To launch my containers I then just call

    # docker-compose up

To make this work with stack, we need to remove the build line above and replace it with an image key.

    etsy-web:
      image: etsy-web
      expose:
        - 80
        - 8080
        - 1337
      links:
        - etsy-mongo
        - etsy-redis
      ports:
        - "1337:1337"
        - "80:80"
      command: npm start

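Then you have to build the etsy-web image first and then deploy the stack. The build needs -t so the image gets the name the compose file refers to, and docker stack deploy needs a name for the stack (etsy below is only an example name).

    # docker build -t etsy-web .

    # docker stack deploy --compose-file docker-compose.yaml etsy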

So it is that easy. Change one key in your yaml file and you can be up and running.
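One caveat worth sketching: as far as I know, docker stack deploy expects the version 3 compose format, where services sit under a top-level services key. Assuming that, the same service would look roughly like this. I have kept it to the essentials (image, ports, command), so treat it as a starting point rather than the finished file.

```yaml
version: "3"
services:
  etsy-web:
    image: etsy-web          # built beforehand with docker build, not by the stack
    ports:
      - "1337:1337"
      - "80:80"
    command: npm start
```

The point stays the same: the stack only ever sees an image name, so the build has to happen before the deploy.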

env_file alternative

With stack you specify the environment variables using the environment key.

This can use either the dictionary or the array format.

Dictionary

    environment:
      PASSWORD: qwerty
      USERNAME: admin

or Array

    environment:
      - PASSWORD=qwerty
      - USERNAME=admin

Also take note that the environment variables in the docker-compose.yaml file override any environment variables defined in the Dockerfile for the image. Additionally, values can be passed in from the command line when calling "docker stack deploy": variables set in the shell are substituted wherever the compose file references them as ${VARIABLE}, so a value supplied this way ends up overriding what the Dockerfile defines as well.
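Here is a small sketch of passing a value in from the shell. It assumes that docker stack deploy substitutes ${...} references from the shell environment when it reads the file. ETSY_PASSWORD is a hypothetical variable name chosen for this example.

```yaml
version: "3"
services:
  etsy-web:
    image: etsy-web
    environment:
      # ETSY_PASSWORD is a hypothetical shell variable, filled in at deploy time
      - PASSWORD=${ETSY_PASSWORD}
      - USERNAME=admin
```

Under that assumption, exporting ETSY_PASSWORD in the shell before calling docker stack deploy keeps the real password out of the yaml file.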

external_links alternative

"stack" uses links and external hosts to establish service names that are looked up when the containers are launched. This is a change from before, when /etc/hosts was modified in each container to establish container connectivity. See

Docker Service Discovery.
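In practice this means a service can reach another one simply by its service name. As I understand it, services in the same stack share an overlay network by default and Swarm's built-in DNS resolves the names. The sketch below assumes that. MONGO_URL and REDIS_URL are hypothetical variable names the application would read, and mongo and redis stand in for whatever images the other two services actually use.

```yaml
version: "3"
services:
  etsy-web:
    image: etsy-web
    environment:
      # hypothetical variables; the hostnames are just the service names below
      - MONGO_URL=mongodb://etsy-mongo:27017
      - REDIS_URL=redis://etsy-redis:6379
  etsy-mongo:
    image: mongo
  etsy-redis:
    image: redis
```

No /etc/hosts editing is involved; the name lookup happens when the container asks for it.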

Benefits of Stack

Even though there are changes to some of the yaml file format and some additional command line options, the benefits of having services over containers, and stacks over containers, are huge. I can now have a managed stack that keeps my services up and running based on policies that I have established for each service.

DWP