With the shift from traditional client-server application software to cloud-aware applications, many software engineers have found themselves dusting off old system administration books from college. With multiple services running on multiple machines or containers, software engineers have to manage their applications across more and more complex environments. Talking with some of my customers, I have found common pain points in managing these complex applications:

- Consistency between environments

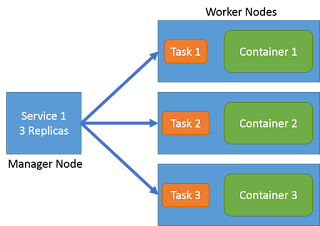

- Single point of failure services

- Differing environment requirements (Not all environments are created equal)

- Managing multiple environments across multiple clouds

All of these factors, and many more, can lead to wasted time, applications being released into production before their time, or, worst of all, unhappy software engineers.

DevOps to the rescue?

Wouldn't it be nice if software engineers just worried about their application and its code, instead of all of the environments it has to run on? In some places that is exactly what happens. Developers develop on their local laptops or in a development cloud, then check in their code, and it moves to production. DevOps cleans up any problems with applications that use single-instance, bottlenecked services or out-of-sync versions of centralized services, and adds load-balancing services to the front end or back end of the application. The application developers have no clue what mess they have caused with their code changes, or with a new version of a service that they are using. Somehow we need to make sure that the application developer is still connected to the application architecture but disconnected from the complexity of managing multiple environments.

Single Definition Multiple Environments

Working on my Local machine

One approach that I have been looking at is to define my application as a set of service templates. In this simple example I have a Node.js application that uses Redis and MongoDB. In a YAML format, it might look something like this:

- MyApp:
  - Services:
    - web: NodeJS
      - ports: 80
      - links: mqueue, database
    - mqueue: Redis
      - ports: 6789
    - database: MongoDB
      - ports: 25678, 31502

With this definition I would like to deploy my application on my local box, using VirtualBox. I put this YAML file in the home directory of the application. This should be very familiar to those of you who have used docker-compose. Now I should be able to launch my application on my local machine using a command similar to the one docker-compose provides.

$ caade up

After a couple of minutes my multi-service application is running on my local laptop.

I can change the application code and even make changes to the services that I need to work with.
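To make the idea of a single application definition more concrete, here is a minimal Python sketch of how a tool might hold the definition above in memory, check that every link points at a defined service, and pick a launch order. This is not how caade works internally; the field names (`type`, `ports`, `links`) and both helper functions are assumptions made for illustration.

```python
# Hypothetical in-memory form of the application definition shown earlier.
# The field names ("type", "ports", "links") are assumptions for illustration.
APP_DEFINITION = {
    "MyApp": {
        "Services": {
            "web": {"type": "NodeJS", "ports": [80], "links": ["mqueue", "database"]},
            "mqueue": {"type": "Redis", "ports": [6789], "links": []},
            "database": {"type": "MongoDB", "ports": [25678, 31502], "links": []},
        }
    }
}


def find_broken_links(app_definition):
    """Return (service, link) pairs whose link target is not a defined service."""
    broken = []
    for app in app_definition.values():
        services = app["Services"]
        for name, spec in services.items():
            for link in spec.get("links", []):
                if link not in services:
                    broken.append((name, link))
    return broken


def launch_order(services):
    """Order services so each one comes after the services it links to.

    Assumes every link is valid and that the links contain no cycles.
    """
    ordered, seen = [], set()

    def visit(name):
        if name in seen:
            return
        seen.add(name)
        for link in services[name].get("links", []):
            visit(link)
        ordered.append(name)

    for name in services:
        visit(name)
    return ordered


if __name__ == "__main__":
    print(find_broken_links(APP_DEFINITION))
    print(launch_order(APP_DEFINITION["MyApp"]["Services"]))
```

Starting linked services first means the web service finds Redis and MongoDB already running, regardless of which environment the definition is launched in.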

Working in a Development Cloud

Now that I have it running on my laptop I want to make sure that I can run it in a cloud. Most organizations work with development clouds. Typically development clouds are not as big as production and test clouds but give the developer a good place to try out new code and debug problems found in production and test environments. Ideally the developer should use the same application definition and just point to another environment to launch the application.

$ caade up --env=Dev

This launches the same application in the development environment, which could be an OpenStack, VMware, or Kubernetes based SDI solution. The developer really does not care how the infrastructure gets provisioned, just that it is done quickly and reliably. On quick inspection we see a slight difference in the services that are running in the development cloud: there is another instance of the NodeJS service running. This comes from the service definition of the NodeJS service, which is defined to have multiple instances in the development cloud and only one instance in the local environment.

NodeJS.yml - Service Definition

- NodeJS:
  - Local:
    - web:
      - image: node-3.0.2
      - port: 1337
  - Dev:
    - web:
      - image: node-3.0.2
      - port: 1337
    - worker:
      - image: node-3.0.2
      - port: 1338
      - cardinality: 3
  - Test: …
  - Prod: …

This definition is produced by the service and stack developer, not the application developer. The service can therefore be reused by several developers and can be defined for different environments (Local, Dev, Test, and Production). This ensures that services are defined for the different requirements of each environment. For example, the Production NodeJS service might have an NGINX load balancer on the front end of it for serving up NodeJS web services for each user logged in. The key is that this is defined for the service that is reused. This increases reusability and quality at the same time.
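As a rough illustration of how one service definition can yield a different set of running instances per environment, the Python sketch below expands the Local and Dev entries of the NodeJS definition into individual instances. The dictionary layout, the default cardinality of 1, and the `expand_instances` helper are assumptions; the Test and Prod entries are elided in the definition above, so they are left out here as well.

```python
# Hypothetical in-memory form of NodeJS.yml (Local and Dev only).
# A missing "cardinality" is assumed to mean a single instance.
NODEJS_SERVICE = {
    "Local": {
        "web": {"image": "node-3.0.2", "port": 1337},
    },
    "Dev": {
        "web": {"image": "node-3.0.2", "port": 1337},
        "worker": {"image": "node-3.0.2", "port": 1338, "cardinality": 3},
    },
}


def expand_instances(service_definition, env):
    """List the instances one environment would run, one entry per instance."""
    if env not in service_definition:
        raise KeyError(f"no definition for environment {env!r}")
    instances = []
    for role, spec in service_definition[env].items():
        for index in range(spec.get("cardinality", 1)):
            instances.append(
                {"name": f"{role}-{index}", "image": spec["image"], "port": spec["port"]}
            )
    return instances


if __name__ == "__main__":
    for env in ("Local", "Dev"):
        names = [instance["name"] for instance in expand_instances(NODEJS_SERVICE, env)]
        print(env, names)
```

The application definition still only says `web: NodeJS`; the extra instances in Dev come entirely from the service definition.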

Working in the Test Cloud

Now that I have tried my application in the development cloud, it is time to run it through a series of tests before it gets pushed to production. This is just as easy for the developer as working in the development cloud.

$ caade up --env=Test

$ caade run --env=Test --exec runTestSuites

We launch the environment and then run the test suites in it. When the environment launches, you can see additional instances of the same services we saw in the development cloud. There is also a new service running in the environment: the Perf Monitor Service, which monitors the performance of the services while the tests are running. Where did the definition of this service come from? It came from the application stack definition. Just like the service definition, the stack definition can give the application a different service landscape for each environment. But the software developer still sees them as the same. That is to say, code should not change based on the environment that is running the application. This decouples the application from the environment and frees up the software developer to focus on code and not environments.
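One way to picture an application stack definition that varies by environment is a base set of services plus per-environment extras. The Python sketch below is only an assumption about what such a definition could look like; the post does not show the stack definition format, and the `base`/`extras` keys, the `perf-monitor` name, and the `services_for` helper are all hypothetical.

```python
# Hypothetical application stack definition. The post only states that the
# Test environment gains a Perf Monitor service; every name here is illustrative.
STACK_DEFINITION = {
    "base": ["web", "mqueue", "database"],
    "extras": {
        "Test": ["perf-monitor"],
    },
}


def services_for(stack_definition, env):
    """Return the services to run in env: the base set plus that environment's extras."""
    extras = stack_definition["extras"].get(env, [])
    return list(stack_definition["base"]) + list(extras)


if __name__ == "__main__":
    print(services_for(STACK_DEFINITION, "Dev"))
    print(services_for(STACK_DEFINITION, "Test"))
```

The application code talks to `web`, `mqueue`, and `database` in every environment; the monitoring service is added around it without any code change.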

What about Production

The ultimate goal, of course, is to get the application into production. Some organizations, the smart ones, don't let developers publish directly into production without passing through some gates. So instead of just calling "caade up --env=Prod", we have a publish mechanism that versions the application, its configurations, and its supporting services.

$ caade publish --version=1.0.2

In this case the application is published and tagged with version 1.0.2. Once the application is published, the environment is launched if it is not currently running. If it is running, the service is upgraded to the new version. The upgrade process will be covered in another blog post; suffice it to say that it allows for rolling updates with minimal or no downtime. As you can see, compared with the test environment, some services have been added and some taken away.
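The launch-or-upgrade decision described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the actual publish implementation: the `environment` dictionary, its `running` and `version` keys, and the `publish` function are hypothetical, and the rolling upgrade itself is not modeled.

```python
def publish(environment, version):
    """Tag a release and decide whether the environment is launched or upgraded.

    `environment` is a hypothetical dict with "running" (bool) and
    "version" (str or None). Returns the action taken: "launch" or "upgrade".
    """
    action = "upgrade" if environment["running"] else "launch"
    environment["running"] = True
    environment["version"] = version
    return action


if __name__ == "__main__":
    prod = {"running": False, "version": None}
    print(publish(prod, "1.0.2"))  # environment was not running yet
    print(publish(prod, "1.0.3"))  # environment is already running
```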

Happy "Coder" Happy Company

The software engineer in this story focuses on writing software, not on the environment. Services are reused from application to application. Environment requirements are met with service and application definitions. Stack and service developers focus on writing services for reuse instead of fixing application developers' code. Now your company can run fast and deploy quality products into production.